Note

This is my entry for 3Blue1Brown’s first Summer of Math Exposition (SoME1). I’m glad to report that it was one of the top 100 of more than 1,200 entries and that Grant Sanderson ranked it as one of his favorite written entries!

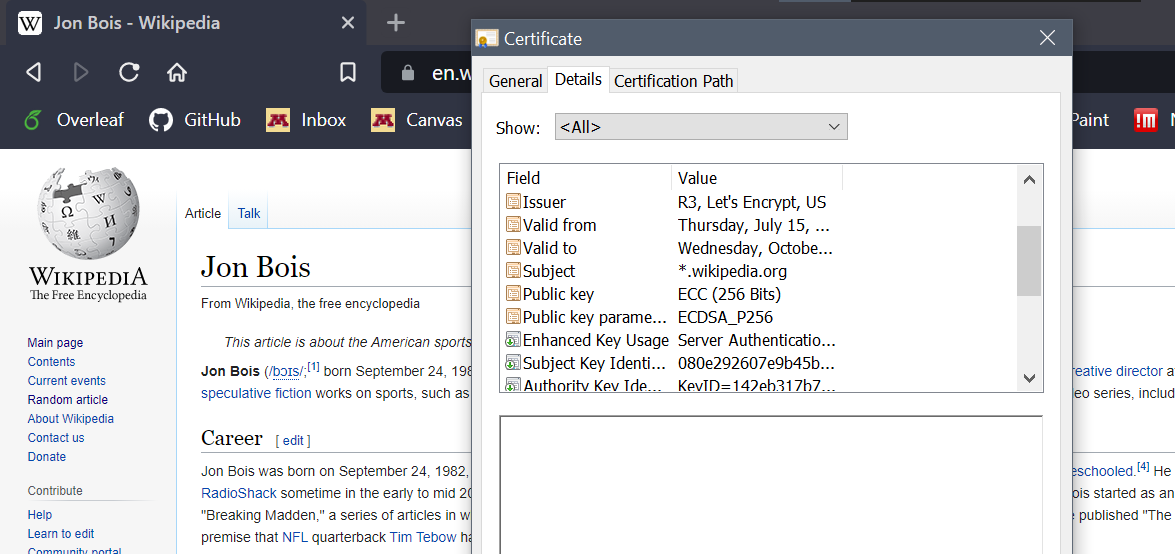

There’s no question that elliptic curves are incredibly important to math and cryptography.

Figure: Elliptic curve cryptography spotted in Wikipedia’s security certificate

Elliptic curves were the key to Andrew Wiles’s famous proof of Fermat’s Last Theorem. They were even used to fill a void in one of M. C. Escher’s artworks.

But their most creative use, in my opinion, is in my favorite algorithm. Strangely enough, it’s an algorithm literally built to fail. My goal is for this blog post to read like a combination of an engaging textbook and a mystery novel. By the end, I hope to give you the feeling that you could have invented this algorithm yourself by piecing together the title of this post and what you’ve learned.

By the way, the algorithm is a factoring algorithm. The goal is, given an integer, find an equivalent expression that’s a product of other integers.

Figure: A pretty good example

What are elliptic curves?

Elliptic curves consist of the solutions $(x, y)$ to

$$y^2 = x^3 + ax + b$$

where $a$ and $b$ are constants.

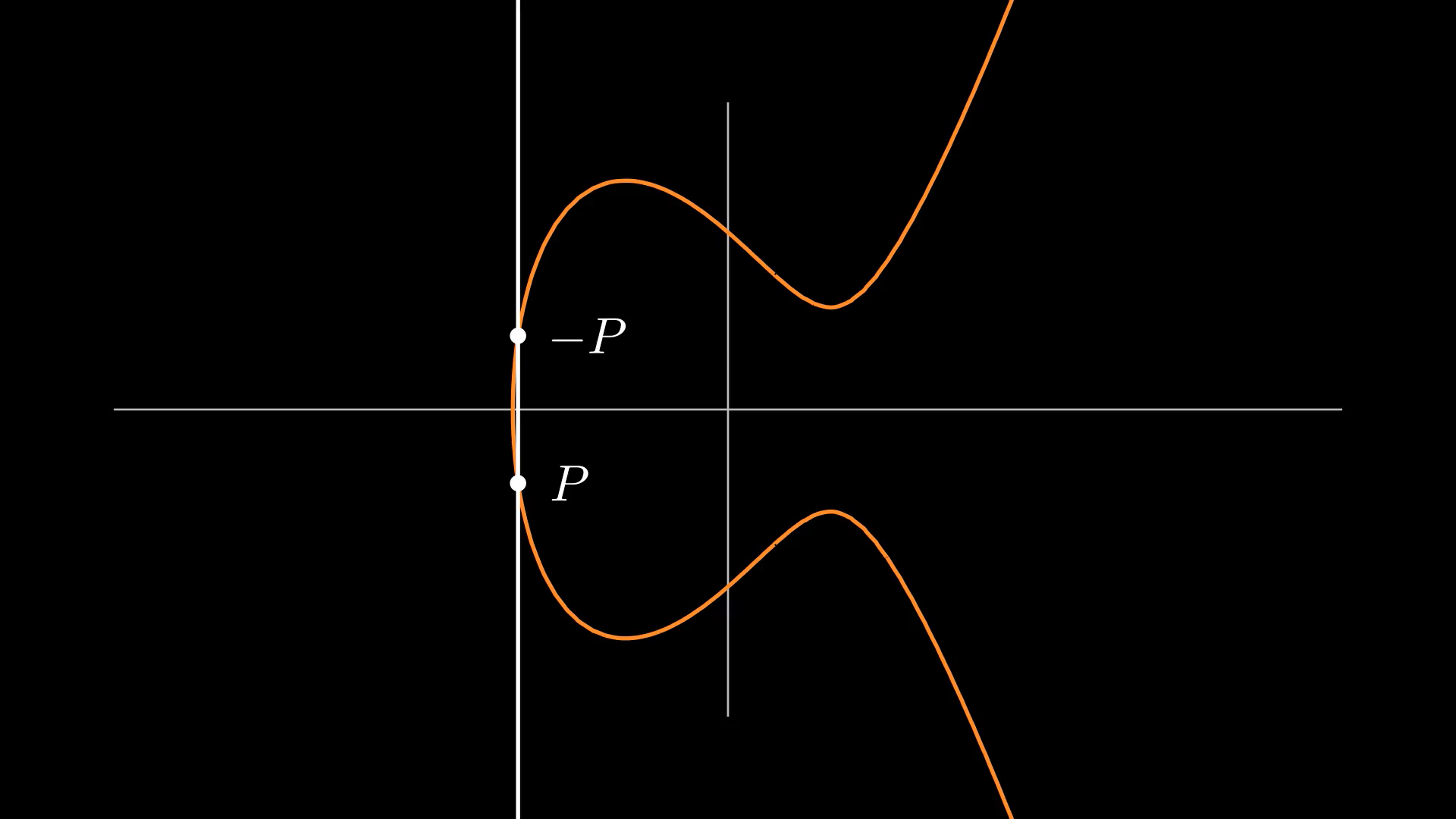

Figure: Some choices of $a$ and $b$ result in the graph having two components.

What’s interesting about elliptic curves for this algorithm is that they have

Group structure

Roughly speaking, we say that a set has a group structure if we can define an operation on all its elements which behaves like addition. That is, for a set $G$ and $+$, an operation on $G$, if

- For all $a$, $b$, $c$ in $G$, we have $(a + b) + c = a + (b + c)$

- There exists $e$ in $G$ such that, for every $a$ in $G$, we have $a + e = e + a = a$. $e$ is known as the identity element of $G$

- For each $a$ in $G$, there exists a unique $b$ in $G$ such that $a + b = b + a = e$. $b$ is known as the inverse of $a$,

then $G$ has group structure.
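As a concrete check of these axioms, here is a minimal Python sketch that brute-forces them on a small finite set. The helper `is_group` and the two "clock" operations are illustrative names, not from any library, and the approach assumes the set is small enough to enumerate.

```python
from itertools import product

def is_group(elements, op):
    """Brute-force check of the group axioms on a small finite set."""
    elements = list(elements)
    # An operation "on the set" must never leave the set.
    if any(op(a, b) not in elements for a, b in product(elements, repeat=2)):
        return False
    # Associativity: (a + b) + c == a + (b + c)
    if any(op(op(a, b), c) != op(a, op(b, c))
           for a, b, c in product(elements, repeat=3)):
        return False
    # Identity: some e with a + e == e + a == a for every a
    identities = [e for e in elements
                  if all(op(a, e) == a and op(e, a) == a for a in elements)]
    if not identities:
        return False
    e = identities[0]
    # Inverses: every a has exactly one b with a + b == b + a == e
    return all(sum(op(a, b) == e and op(b, a) == e for b in elements) == 1
               for a in elements)

# Hypothetical examples: a 6-hour clock under addition and multiplication.
clock_add = lambda a, b: (a + b) % 6
clock_mul = lambda a, b: (a * b) % 6

print(is_group(range(6), clock_add))  # True
print(is_group(range(6), clock_mul))  # False: 0 has no inverse
```

Clock addition passes every axiom, while clock multiplication fails only at the inverse step, which is the same kind of failure this post is building toward.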

In the case of elliptic curves, the elements are the points on the curve, and the operation of choice involves first drawing a line through the two points being added. The third point where the line intersects the curve is the inverse of the sum. Since elliptic curves are symmetric about the $x$-axis, the inverse can be inverted by reflecting across the $x$-axis to recover the sum.

Animation: Point addition for the usual case of two distinct points

Question

If you were confused about why we have to invert the third intersection, read the following paragraph, then consider the above video animating $P + Q = -R$, where $R$ is the third intersection. Rearranging this results in $P + Q + R = \mathcal{O}$. If we did not invert the third intersection, does this equation still hold?

In the case of adding a point to its inverse, there’s no third intersection between the line and the curve. This leads us to define the nonexistent point ‘at infinity’ to be the identity element (denoted $\mathcal{O}$), since its sum with any other point can be defined as simply the other point, in addition to being the sum of inverses.

Animation: Point at infinity not shown

Last is the case where we add a point to itself.

Animation: The drawn line is tangent to the elliptic curve

With this definition of the addition operation on the curve, we can formulate an algorithm that takes in point coordinates and returns the coordinates of the sum of the points.

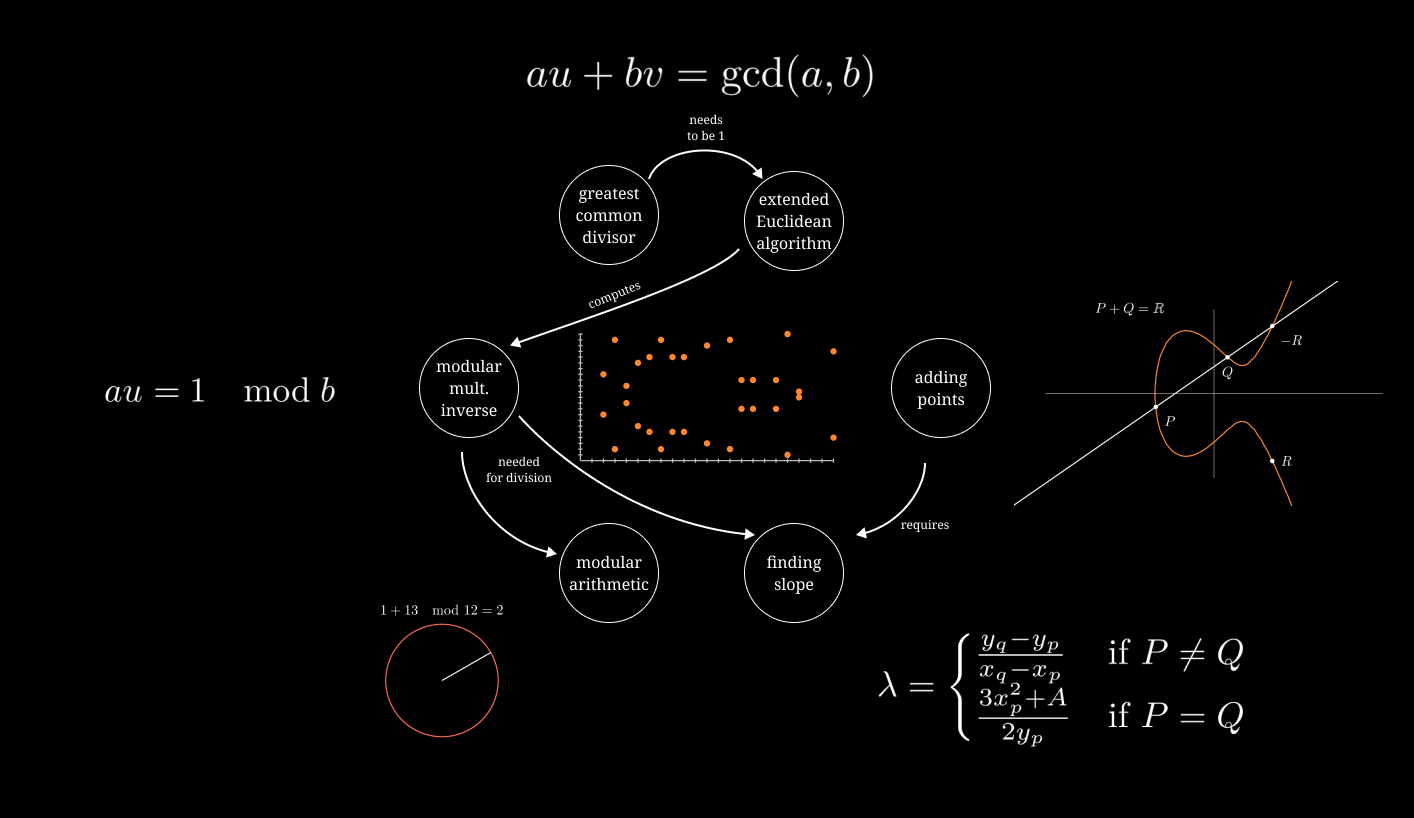

Elliptic curve addition algorithm

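Given the curve

$$E: y^2 = x^3 + ax + b$$

and points $P = (x_P, y_P)$ and $Q = (x_Q, y_Q)$,

- If $P = \mathcal{O}$, then $P + Q = Q$.

- If $Q = \mathcal{O}$, then $P + Q = P$.

- If $x_P = x_Q$ and $y_P = -y_Q$, then $P + Q = \mathcal{O}$. We have an undefined slope from dividing by zero, as $P$ and $Q$ have the same $x$-coordinate, resulting in a vertical line to the identity element at infinity.

- Otherwise, let the slope of the line through $P$ and $Q$ be

$$\lambda = \begin{cases} \dfrac{y_Q - y_P}{x_Q - x_P} & \text{if } P \neq Q, \\[1ex] \dfrac{3x_P^2 + a}{2y_P} & \text{if } P = Q. \end{cases}$$

The first case is the standard rise-over-run calculation of slope. The second is the slope of the tangent line computed via implicit differentiation.

Either way, we have our line

$$y = \lambda x + \nu$$

where $\nu = y_P - \lambda x_P$ is the $y$-intercept needed to pass through $P$.

We plug this expression of $y$ into the elliptic curve equation to begin solving for the third intersection:

$$(\lambda x + \nu)^2 = x^3 + ax + b$$

Expanding, rearranging, and grouping terms gives

$$x^3 - \lambda^2 x^2 + (a - 2\lambda\nu)x + (b - \nu^2) = 0$$

Remember, this equation was written using both the line equation and the elliptic curve equation, meaning any solution to it represents an intersection between the two. So if the third intersection is $(x_R, y_R)$,

$$x^3 - \lambda^2 x^2 + (a - 2\lambda\nu)x + (b - \nu^2) = (x - x_P)(x - x_Q)(x - x_R)$$

Notice the coefficients of $x^2$ imply $\lambda^2 = x_P + x_Q + x_R$. We can rearrange this into $x_R = \lambda^2 - x_P - x_Q$ and plug into the line equation to find the corresponding $y$-coordinate, $y_R = \lambda x_R + \nu$.

Finally, we invert the third intersection coordinates to get our answer: $P + Q = (x_R, -y_R)$.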
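The procedure above can be sketched in Python over the rational numbers. This is only an illustration under assumptions: the curve is taken to be $y^2 = x^3 + ax + b$, `None` stands in for the point at infinity, and `ec_add` is a hypothetical helper name. Exact fractions are used to avoid floating-point error.

```python
from fractions import Fraction

INFINITY = None  # assumed stand-in for the identity element at infinity

def ec_add(P, Q, a):
    """Add points P and Q on y^2 = x^3 + a*x + b (b is not needed here)."""
    if P is INFINITY:
        return Q
    if Q is INFINITY:
        return P
    (xp, yp), (xq, yq) = P, Q
    if xp == xq and yp == -yq:
        return INFINITY  # vertical line through a point and its inverse
    if P == Q:
        slope = Fraction(3 * xp * xp + a, 2 * yp)  # tangent line
    else:
        slope = Fraction(yq - yp, xq - xp)         # rise over run
    xr = slope * slope - xp - xq                   # third intersection, x
    yr = slope * (xr - xp) + yp                    # third intersection, y
    return (xr, -yr)                               # reflect across the x-axis

# Example curve chosen for illustration: y^2 = x^3 + 17, so a = 0.
P, Q = (-1, 4), (2, 5)
print(ec_add(P, Q, 0))  # (Fraction(-8, 9), Fraction(-109, 27))
print(ec_add(P, P, 0))  # (Fraction(137, 64), Fraction(-2651, 512))
```

Both results can be checked by substituting them back into $y^2 = x^3 + 17$.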

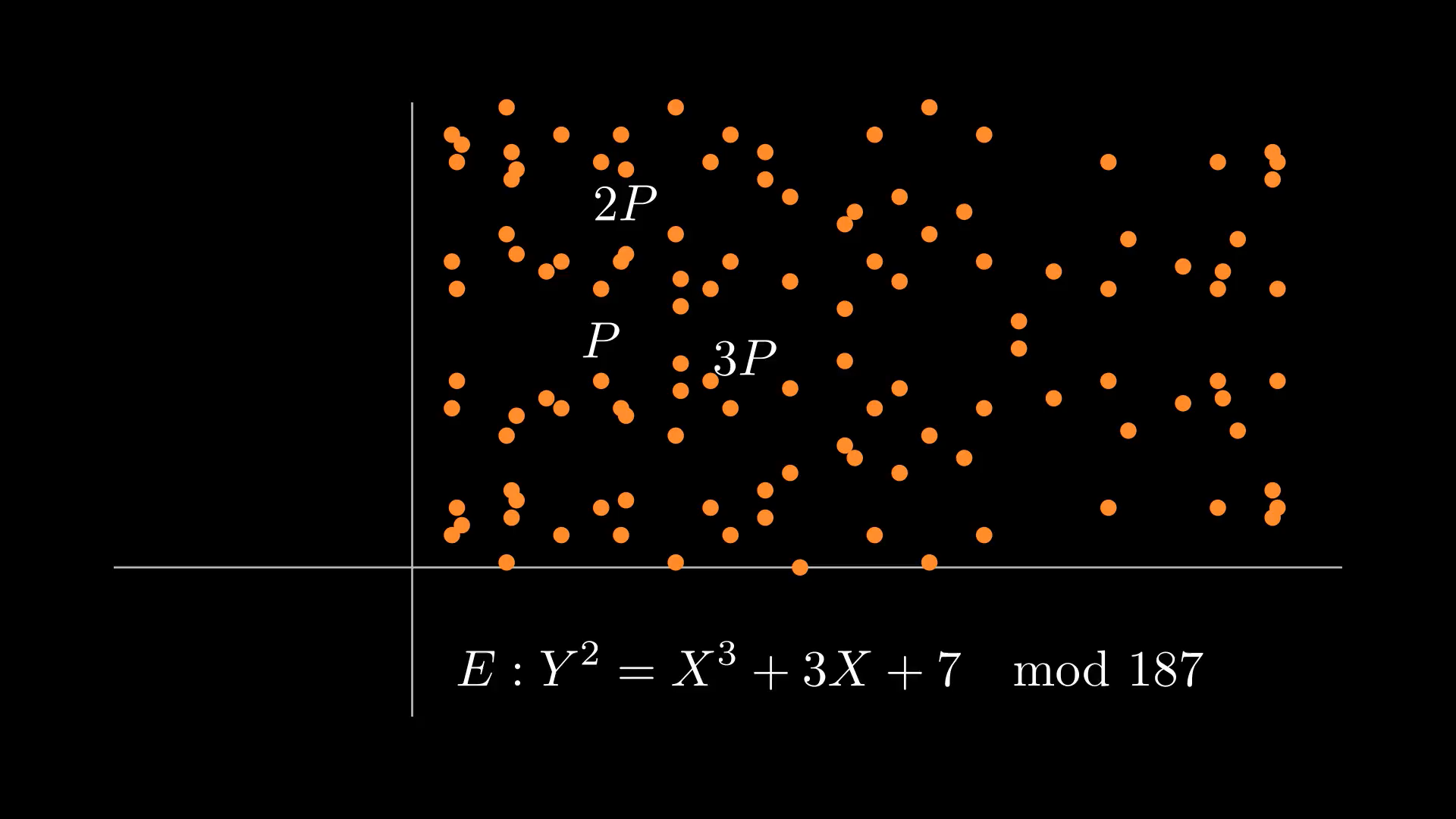

Besides the group structure of elliptic curves, the other key detail for this algorithm is considering the curves under

Modular arithmetic

Figure: Modular arithmetic works on integers, making the elliptic curve look unrecognizable

Modular arithmetic refers to the system of operating on the integers where numbers ‘wrap around’ when reaching a critical value called the modulus. In modular arithmetic, we may take expressions ‘modulo’ or ‘mod’ the modulus, meaning that we take the remainder after dividing by the modulus.

More formally, if we say that $m$ divides $a$, then there exists another integer $k$ where $a = km$. Then in modular arithmetic, we say that $a \equiv b \pmod{m}$ (‘$a$ is congruent to $b$ mod $m$’) if $m$ divides $a - b$.

For us to continue to be able to add points on elliptic curves under modular arithmetic, we need to see the modular equivalents of the operations in the procedure:

Addition, subtraction, multiplication, and division.

Modular addition and subtraction work like their ordinary counterparts, followed by reducing the result modulo the modulus.

Multiplication behaves well under modular arithmetic thanks to the useful property that multiplying then mod-ing is equivalent to mod-ing then multiplying:

$$(a \cdot b) \bmod m = \big((a \bmod m) \cdot (b \bmod m)\big) \bmod m$$
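A few lines of Python make these rules concrete. The function name `congruent` and the modulus 7 are arbitrary choices for illustration; Python’s `%` operator already returns a non-negative remainder for a positive modulus.

```python
def congruent(a, b, m):
    """True when m divides a - b, i.e. a and b are congruent mod m."""
    return (a - b) % m == 0

m = 7  # arbitrary example modulus

print(congruent(23, 2, m))  # True, since 23 - 2 = 21 = 3 * 7
print((5 + 4) % m)          # 2: addition wraps around past 7
print((2 - 4) % m)          # 5: subtraction wraps around below 0

# Multiplying then reducing matches reducing then multiplying.
a, b = 123, 456
print((a * b) % m == ((a % m) * (b % m)) % m)  # True
```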

But modular division is slightly more complicated. It requires finding a number called the modular multiplicative inverse. In regular arithmetic, if we wanted to divide by 5, we could just multiply by one fifth, its reciprocal: the number to multiply 5 by to get 1. Here in modular arithmetic land, we’re going to use the extended Euclidean algorithm to find the modular multiplicative inverse and make modular division possible.

Extended Euclidean algorithm

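Given integers $a$ and $b$, the extended Euclidean algorithm finds solutions to

$$as + bt = \gcd(a, b)$$

where $\gcd(a, b)$ is the greatest common divisor (GCD) of $a$ and $b$, the largest number that divides both numbers of interest evenly. $s$ and $t$ are known as the Bézout coefficients of $a$ and $b$. When $a$ and $b$ are coprime (their $\gcd$ is 1), these coefficients will give us the modular multiplicative inverse we seek.

Let’s see how it works with an example with $a = 71$ and $b = 21$. First, we compute $\gcd(71, 21)$ using the regular, non-extended, Euclidean algorithm. You simply store the remainder, divide, and repeat.

$$\begin{aligned} 71 &= 3 \cdot 21 + 8 \\ 21 &= 2 \cdot 8 + 5 \\ 8 &= 1 \cdot 5 + 3 \\ 5 &= 1 \cdot 3 + 2 \\ 3 &= 1 \cdot 2 + 1 \\ 2 &= 2 \cdot 1 + 0 \end{aligned}$$

Each step after

$$71 = 3 \cdot 21 + 8$$

is in the general form

$$r_{i-2} = q_i r_{i-1} + r_i$$

where the previous divisor and remainder become the new dividend and divisor.

The remainders are non-negative and strictly decreasing, so the process must reach a remainder of 0. Since the last remainder is 0, the final nonzero remainder $r_n$ evenly divides $r_{n-1}$. $r_n$ also divides

$$r_{n-2} = q_n r_{n-1} + r_n$$

We can continue this pattern all the way back up to show that $r_n$ divides $a$ and $b$ like a GCD should.

Next, we work backwards to find $s$ and $t$ from $\gcd(a, b) = 1$.

$$\begin{aligned} 1 &= 3 - 1 \cdot 2 \\ &= 3 - 1 \cdot (5 - 1 \cdot 3) = 2 \cdot 3 - 1 \cdot 5 \\ &= 2 \cdot (8 - 1 \cdot 5) - 1 \cdot 5 = 2 \cdot 8 - 3 \cdot 5 \\ &= 2 \cdot 8 - 3 \cdot (21 - 2 \cdot 8) = 8 \cdot 8 - 3 \cdot 21 \\ &= 8 \cdot (71 - 3 \cdot 21) - 3 \cdot 21 = 8 \cdot 71 - 27 \cdot 21 \end{aligned}$$

So we have

$$71 \cdot 8 + 21 \cdot (-27) = 1$$

By definition, $t$ is the modular multiplicative inverse of $b$ modulo $a$; when multiplied with $b$ it is congruent to $1$ modulo $a$. In this case, -27 is the modular multiplicative inverse of 21 modulo 71.

Notice that $\gcd(a, b)$ had to be equal to 1 for this to work. Similar to regular arithmetic needing a number to be non-zero for division, modular arithmetic also has a condition for division to be defined.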
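The same computation can be sketched in Python. Note that this is the common iterative variant, which tracks the coefficients alongside the remainders instead of working backwards afterwards; the function name `extended_gcd` is an illustrative choice.

```python
def extended_gcd(a, b):
    """Return (g, s, t) with a*s + b*t == g == gcd(a, b)."""
    old_r, r = a, b   # remainders
    old_s, s = 1, 0   # coefficients of a
    old_t, t = 0, 1   # coefficients of b
    while r != 0:
        q = old_r // r
        old_r, r = r, old_r - q * r
        old_s, s = s, old_s - q * s
        old_t, t = t, old_t - q * t
    return old_r, old_s, old_t

g, s, t = extended_gcd(71, 21)
print(g, s, t)         # 1 8 -27
print(t % 71)          # 44, the same inverse written as a positive residue
print(21 * t % 71)     # 1
```

Reducing the coefficient modulo 71 gives 44, so dividing by 21 modulo 71 amounts to multiplying by 44.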

Recap

At this point, you have all you need to be able to come up with a factoring algorithm using elliptic curves modulo a number. Given the context of everything you’ve learned, can you come up with an algorithm?

Figure: Goals: factor a number, fail along the way

Consider the title of this post as your final hint.

The reveal

|

|---|

| Pencil and paper ready! Can you find where division fails when we add to in the curve? Actually try it! |

The key is to consider the scenario where the $\gcd$ is not 1. That would mean the extended Euclidean algorithm fails to compute a modular multiplicative inverse. We fail to divide when calculating the slope, and it is undefined. If $\gcd(a, b) \neq 1$, then $\gcd(a, b)$ is a number we can use to factor $a$ or $b$, since it divides both. Our failure to divide while computing a slope during point addition on the elliptic curve results in finding a nontrivial factor (as long as the $\gcd$ is not the modulus itself)! So an algorithm to factor $N$ can be trying many different curves and many different points until we encounter this scenario.
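To see the failure in isolation, here is a small sketch of modular division that reports the GCD when no inverse exists. The name `divide_mod` and its return convention are assumptions made for this illustration; `pow` with a negative exponent and a modulus requires Python 3.8 or later.

```python
from math import gcd

def divide_mod(num, den, n):
    """Try to compute num / den modulo n.

    Returns (quotient, None) on success, or (None, g) when den has no
    inverse, where g = gcd(den, n) > 1 is the reason for the failure.
    """
    g = gcd(den % n, n)
    if g != 1:
        return None, g
    return (num * pow(den % n, -1, n)) % n, None

print(divide_mod(1, 21, 71))  # (44, None): 21 is invertible modulo 71
print(divide_mod(3, 10, 35))  # (None, 5): failure, and 5 divides 35
print(divide_mod(3, 35, 35))  # (None, 35): failure, but only the trivial factor
```

The second call is the useful kind of failure, while the third shows why the GCD must differ from the modulus to count as a factor.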

Note

In the above example, division fails when we add to because is equal to the point at infinity on the elliptic curve. This is because we try to divide by zero! In the world of modular arithmetic, we’ve attempted to find the multiplicative inverse of a number that’s divisible by the modulus.

|

|---|

| Undefined slope, but due to failure to divide from dividing by zero, not |

Now our algorithm just needs

- a way to generate elliptic curves modulo the number to be factored

- a way to quickly add many points.

We can accomplish both of these by picking the coordinates of a point, defining an elliptic curve such that it contains that point, and adding that point to itself repeatedly (computing its multiples).

If we choose a point $P = (x_P, y_P)$, then pick a random $a$, we have

$$y_P^2 = x_P^3 + a x_P + b$$

when we evaluate the curve at $P$. In order for $P$ to be a solution of the curve, we must have $b = y_P^2 - x_P^3 - a x_P$ to satisfy the equation.
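A minimal sketch of this curve-picking step, assuming the curve has the form $y^2 = x^3 + ax + b$ modulo the number `n` being factored. The function name `random_curve_and_point` is hypothetical, and the sketch does not check whether the resulting curve is singular.

```python
import random

def random_curve_and_point(n, rng=random):
    """Pick a point and a coefficient a at random, then solve for b mod n."""
    x = rng.randrange(n)
    y = rng.randrange(n)
    a = rng.randrange(n)
    # b is forced by requiring (x, y) to satisfy y^2 = x^3 + a*x + b (mod n).
    b = (y * y - x ** 3 - a * x) % n
    return a, b, (x, y)

a, b, (x, y) = random_curve_and_point(8051)
# The chosen point lies on the chosen curve by construction.
print((y * y - (x ** 3 + a * x + b)) % 8051 == 0)  # True
```

Choosing the point first avoids having to compute square roots modulo `n`, which would be hard without already knowing the factors.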

Figure: Choosing random elliptic curves

Note

In reality, it is faster to compute $k!P$ in the $k$th step of the algorithm than to repeatedly add a point to itself. This is accomplished by the double-and-add algorithm, which I will cover in the sequel post.
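Putting the pieces together, one possible sketch of the whole procedure looks like the following. Everything here is an assumption for illustration: the names `DivisionFailed`, `add`, `multiply`, and `lenstra`, the bounds `max_curves` and `max_k`, and the use of an exception to signal the failed division. For simplicity it multiplies by plain repeated addition rather than double-and-add, and it needs Python 3.8 or later for `pow` with a negative exponent.

```python
import random
from math import gcd

class DivisionFailed(Exception):
    """Raised when a denominator has no inverse mod n; carries the gcd."""
    def __init__(self, g):
        super().__init__(g)
        self.g = g

def inverse(d, n):
    g = gcd(d % n, n)
    if g != 1:
        raise DivisionFailed(g)
    return pow(d % n, -1, n)

def add(P, Q, a, n):
    """Point addition mod n; None plays the role of the point at infinity."""
    if P is None:
        return Q
    if Q is None:
        return P
    (xp, yp), (xq, yq) = P, Q
    if xp == xq and (yp + yq) % n == 0:
        return None
    if P == Q:
        slope = (3 * xp * xp + a) * inverse(2 * yp, n) % n
    else:
        slope = (yq - yp) * inverse(xq - xp, n) % n
    xr = (slope * slope - xp - xq) % n
    yr = (slope * (xp - xr) - yp) % n
    return (xr, yr)

def multiply(k, P, a, n):
    """Compute k*P by repeated addition (simple, not the fastest way)."""
    R = None
    for _ in range(k):
        R = add(R, P, a, n)
    return R

def lenstra(n, max_curves=200, max_k=50, seed=0):
    """Return a nontrivial factor of n, or None if every curve was unlucky."""
    rng = random.Random(seed)
    for _ in range(max_curves):
        # Picking x, y, a fixes the curve; b is implied and never needed.
        x, y, a = (rng.randrange(n) for _ in range(3))
        P = (x, y)
        try:
            for k in range(2, max_k + 1):
                P = multiply(k, P, a, n)  # P is now k! times the start point
                if P is None:
                    break
        except DivisionFailed as failure:
            if failure.g != n:
                return failure.g
    return None

print(lenstra(8051))  # a nontrivial factor of 8051 = 83 * 97
```

Which factor comes out depends on the random curves, so the example only promises some nontrivial factor of 8051.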

Conclusion

I hope you were able to reinvent this algorithm—Lenstra’s elliptic curve factorization algorithm—before its reveal.

It’s intriguing that the algorithm doesn’t just fail, but accomplishes its goal as a result of failing to compute a value. If only math tests were like that!

Info

For part two of this series on Lenstra’s algorithm, see the next post for how Python exceptions can be used to write what might be the most intuitive implementation of the algorithm.

Anticipated questions

Why do we expect adding a point to itself repeatedly to eventually produce the point at infinity from dividing by zero?

- You should learn about Lagrange’s theorem for more details than I will discuss here. When you have a group with finitely many elements, like we do with elliptic curves modulo a number, computing the multiples of an element by repeatedly adding it to itself can only produce finitely many distinct points, so the multiples must eventually reach the identity element. If you add the point once more to the identity element, you are back where you started. Thus, mathematicians call the set of elements generated by doing this a cyclic subgroup of the original group. We expect this to happen because of the point’s inclusion in a cyclic subgroup:

  $$\{P, 2P, 3P, \dots, nP = \mathcal{O}\}$$
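This cycling can be watched directly on a small curve modulo a prime. The sketch below assumes the curve $y^2 = x^3 + 2x + 2$ modulo 17 with starting point $(5, 1)$, chosen only as a convenient small example; `add_mod_p` and `order` are hypothetical helper names, and `None` stands in for the point at infinity.

```python
def add_mod_p(P, Q, a, p):
    """Point addition on y^2 = x^3 + a*x + b modulo a prime p."""
    if P is None:
        return Q
    if Q is None:
        return P
    (xp, yp), (xq, yq) = P, Q
    if xp == xq and (yp + yq) % p == 0:
        return None  # point plus its inverse: the point at infinity
    if P == Q:
        slope = (3 * xp * xp + a) * pow((2 * yp) % p, -1, p) % p
    else:
        slope = (yq - yp) * pow((xq - xp) % p, -1, p) % p
    xr = (slope * slope - xp - xq) % p
    yr = (slope * (xp - xr) - yp) % p
    return (xr, yr)

def order(P, a, p):
    """Smallest k >= 1 such that k*P is the point at infinity."""
    k, R = 1, P
    while R is not None:
        R = add_mod_p(R, P, a, p)
        k += 1
    return k

print(add_mod_p((5, 1), (5, 1), 2, 17))  # (6, 3), which is 2P
print(order((5, 1), 2, 17))              # 19
```

This curve has 19 points including the point at infinity, and 19 is prime, so by Lagrange’s theorem every other point on it also has order 19.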

Who made this wonderful algorithm?

- Hendrik Lenstra, a Dutch mathematician. Three of his brothers are also mathematicians, and he is the one, mentioned earlier, who used elliptic curves to fill the void in M. C. Escher’s Print Gallery.

How do we know that finding a factor is likely? From the last note, why is computing factorial multiples of a point faster than just adding the point to itself many times?

- I didn’t want to drag out an already long blog post by talking about finite fields, but the answer requires knowing about them. They are algebraic objects, like groups, that are defined with prime numbers. Essentially, if we are trying to factor $N$, which has prime factor $p$, using point $P$, then on the elliptic curve over the finite field defined by $p$ we have $nP = \mathcal{O}$ for some number $n$. The division failure that we want will happen when we compute $kP$ where $k$ is some multiple of $n$. Thus, computing a factorial multiple of $P$ will help us get there quickly. The size of the finite field depends on $p$; so if $p$ is small, then a smaller factorial multiple will get us our desired division failure. Read this for more details.

Is this algorithm the fastest factoring algorithm? Is this algorithm actually important compared to other factoring algorithms?

- For general-purpose factoring, Lenstra’s algorithm is the third fastest known algorithm. However, it is still in active use because its running time depends mainly on the size of the smallest prime factor rather than on the size of the number being factored, as seen in the previous question. This makes it the fastest algorithm for large numbers which have small factors. For this reason, many factoring programs use Lenstra’s algorithm to start, then switch to algorithms that are faster in general, like the quadratic sieve or the general number field sieve. Even without this utility, the algorithm is still relevant historically, as it helped introduce elliptic curves to the cryptographic community, where they are now widely used.

Can I ask you a question personally?

- Yes, please email me with any questions.

Where is the source code for the animations?

- You can view the source code here. Apologies in advance for the messy code.

Acknowledgments

I learned this from Professor Kai-wen Lan’s MATH 5248 course at the University of Minnesota and its textbook An Introduction to Mathematical Cryptography by Hoffstein, Pipher and Silverman.

Animations are made with ManimCE and illustrations with Figma.